The AI OS: Why Orchestration is Becoming the Real Foundation of Practical AI

When the shine wore off the models

When we began building ProdPad CoPilot, I wanted to create the strongest AI system a product team could rely on. Not a novelty, not a demo, but a genuine working partner that could help with strategy, planning, discovery, and decision-making. Early experiments looked promising. With carefully constructed prompts, the models produced impressive results and felt almost limitless.

That impression changed quickly when we applied them to real product work. Once CoPilot touched multi-turn conversations, branching roadmaps, linked feedback, layered context, and large datasets, performance degraded. The models began to struggle in places where product teams cannot afford mistakes. Research had already warned us about this. The survey by Yue Zhang et al., “Siren’s Song in the AI Ocean” (2023), documents how and why large language models hallucinate, and why this behavior becomes more pronounced in complex tasks.

The gap between the demo and the real world was larger than anyone hoped. That gap is what pushed me deeper into the research behind CoPilot. I needed to understand how to make it not just good, but the best operational AI a product team can use. That exploration is ultimately what led to Conductor.

Seeing the limits up close

CoPilot’s earliest prototypes were good teachers. They revealed patterns that were impossible to ignore once you spotted them. The models were excellent at synthesis and language, but they were unreliable at everything that depended on sequence, state, precision, or structure.

I saw the same issues repeat across every experiment.

- Planning broke down when the workflow grew longer or more conditional.

- Memory slipped. Important context faded or was replaced by invented details.

- Branches collapsed into each other because the model attempted to resolve everything in a single straight line.

- Structured tasks delivered inconsistent or incorrect output.

- Multi-step flows drifted over time and lost their original intent.

Agent frameworks expanded these problems by placing even more responsibility on the model itself. Many of these frameworks are built around the ReAct pattern introduced by Shunyu Yao et al. (2022). Their work showed the strengths of interleaving reasoning and acting, but it also illustrated the fragility that emerges when a model is responsible for both at once.

The more we tried to correct the models through prompting, the clearer it became that the issue was architectural, not textual. CoPilot needed a system around the model, not another round of clever instructions inside it.

How I came around to orchestration

The turning point came after months of iteration. I began to see LLMs less as intelligent workers and more as highly capable specialists. They could interpret and generate. They could reason within a window. They could explore and reframe ideas. They simply could not manage a real workflow alone.

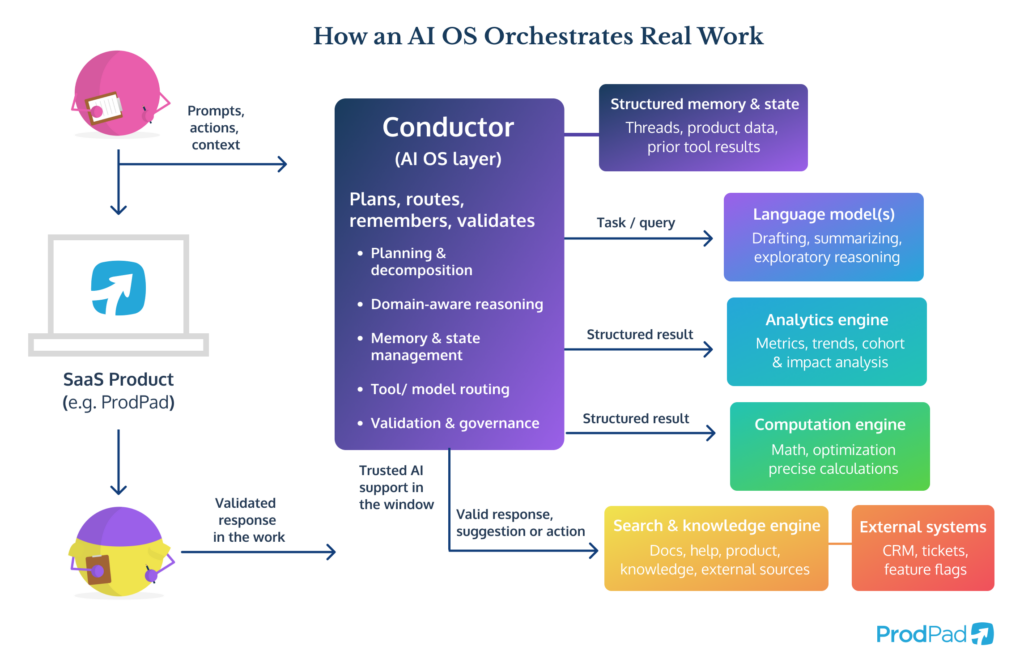

Once that clicked, everything else followed. The model could not be responsible for planning, routing, memory, or execution. It needed an orchestrator that understood the domain, enforced structure, and intervened where the model lacked reliability.

It was reassuring to see research converge toward this same idea. “Toolformer” (Timo Schick et al., 2023) highlighted how models become more reliable when they call external tools such as calculators or search instead of attempting everything internally. Another example is Mengru Xie’s 2024 paper on workflow decomposition for text-to-SQL, which demonstrated significantly better performance when complex tasks were broken into a planner phase, an execution phase, and a validator rather than handled as a single monolithic prompt.

These studies matched our hands-on experience almost perfectly.

This realization shaped our approach to CoPilot. We built Conductor to provide that missing architecture. It governs the flow of information and ensures CoPilot behaves consistently, even in long, branching tasks that product teams run every day.
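To make the planner, execution, and validator split concrete, here is a minimal sketch in Python. It is not Conductor's code. Every name in it (`calculator`, `plan`, `execute`, `validate`, `run`, and the task fields) is a hypothetical stand-in, and the planner is hard-coded where a real system might ask a model to propose steps. The point is only that arithmetic goes to a deterministic tool and each result is checked before the workflow moves on.

```python
import ast
import operator

# Hypothetical calculator tool: evaluates basic arithmetic deterministically
# instead of asking a language model to do the math.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str) -> float:
    def evaluate(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
        raise ValueError("unsupported expression")

    return evaluate(ast.parse(expression, mode="eval").body)


TOOLS = {"calculator": calculator}


def plan(task: dict) -> list[dict]:
    # Planner phase (assumption: hard-coded here for illustration).
    return [{"tool": "calculator",
             "input": f"{task['total_votes']} / {task['ideas']}"}]


def execute(step: dict):
    # Execution phase: run the named tool on the step's input.
    return TOOLS[step["tool"]](step["input"])


def validate(result) -> bool:
    # Validator phase: reject anything that is not a non-negative number.
    return isinstance(result, (int, float)) and result >= 0


def run(task: dict) -> list:
    results = []
    for step in plan(task):
        result = execute(step)
        if not validate(result):
            raise ValueError(f"step failed validation: {step}")
        results.append(result)
    return results
```

Calling `run({"total_votes": 120, "ideas": 8})` computes the average votes per idea through the tool rather than through generated text, so the same input always yields the same number.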

What an AI OS actually does

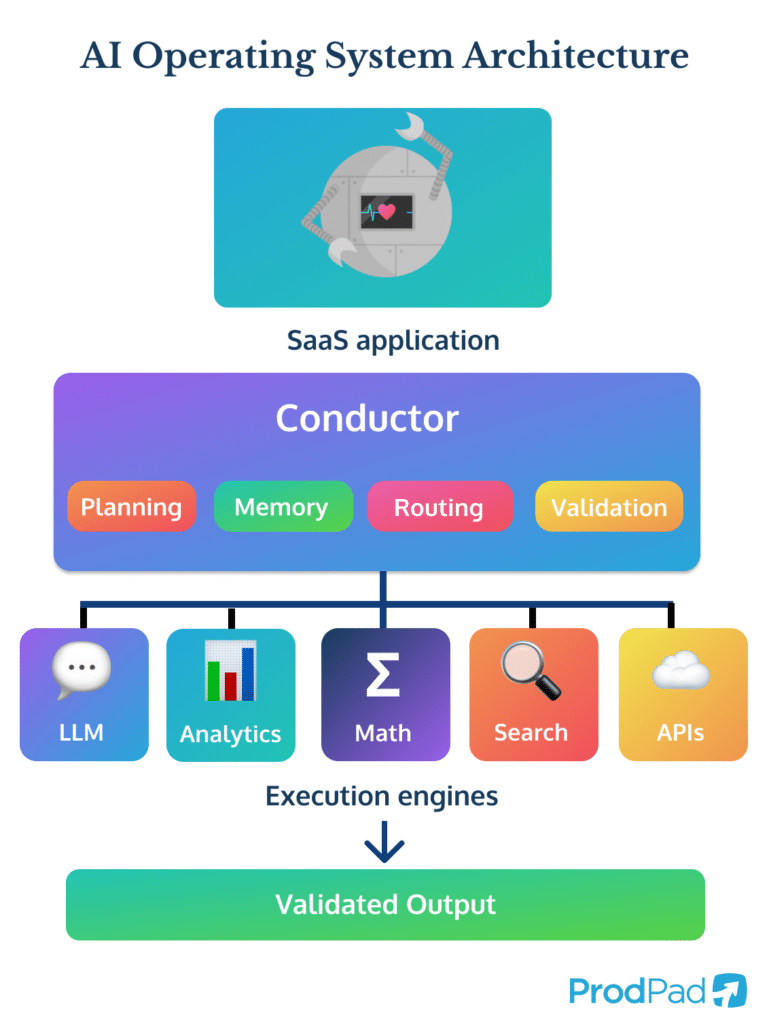

An AI OS provides the scaffolding that production-grade AI has always needed. It surrounds the model with systems that handle the tasks LLMs do not perform well.

- A planner that decomposes the user’s intent

- A router that selects the right tool or model

- A memory layer that retrieves only what is true

- A domain layer that keeps language and concepts grounded

- Execution engines that deliver deterministic outcomes

- Validation that guarantees stability and protects against drift

Once these components are in place, the model can focus on the work it is uniquely good at. This does more than prevent errors. It unlocks workflows that were previously out of reach. CoPilot can now participate in product development cycles with a level of stability that was not possible before Conductor existed.
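The components listed above can be sketched as one small pipeline. This is an illustrative skeleton under stated assumptions, not a description of how Conductor is implemented: the `Step` type, the `DOMAIN_TERMS` vocabulary, the `MEMORY` contents, and every function name are invented for the example, and `summarize` is a placeholder for the point where a language model would be called.

```python
from dataclasses import dataclass


@dataclass
class Step:
    action: str
    payload: str


# Domain layer (hypothetical vocabulary): map loose wording to product terms.
DOMAIN_TERMS = {"ticket": "idea", "epic": "initiative"}

# Memory layer (hypothetical contents): an explicit store, not model recall.
MEMORY = {"roadmap": ["Improve onboarding", "Reduce churn"]}


def normalize(text: str) -> str:
    return " ".join(DOMAIN_TERMS.get(word, word) for word in text.lower().split())


def plan(request: str) -> list[Step]:
    # Planner: a fixed two-step plan, standing in for real decomposition.
    return [Step("retrieve", "roadmap"), Step("summarize", request)]


def retrieve(key: str) -> list[str]:
    # Raises KeyError for unknown keys rather than inventing context.
    return list(MEMORY[key])


def summarize(payload: str, context: list[str]) -> str:
    # Placeholder for the model call; kept deterministic for the sketch.
    return f"{payload}: {len(context)} roadmap items"


def validate(output: str, context: list[str]) -> None:
    if not output or not context:
        raise ValueError("output or context missing")


def run_request(request: str) -> str:
    context: list[str] = []
    output = ""
    for step in plan(normalize(request)):
        # Router: dispatch each step to the component that owns it.
        if step.action == "retrieve":
            context = retrieve(step.payload)
        elif step.action == "summarize":
            output = summarize(step.payload, context)
        else:
            raise ValueError(f"unknown action: {step.action}")
    validate(output, context)
    return output
```

In this arrangement the model-shaped step only ever sees context that the memory layer actually returned, and nothing leaves the pipeline without passing the validation check.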

Why I stopped treating scale as the solution

At the start, I expected larger models to smooth out the problems we encountered. More parameters, more training, more context. Eventually, I realized that scale only improves the parts of the model that were already strong. It does little for the weaknesses that matter most in operational environments.

The results from our research and work in the field aligned with what the emerging literature showed. Systems built from small, specialized components routinely outperform monolithic models when reliability and accuracy matter. They combine the strengths of language models with the strengths of structured tools and deterministic computation.

This is exactly the blend that CoPilot needed.

How Conductor shaped CoPilot

Conductor grew from a simple idea to a working architecture faster than expected because we were building it directly in response to customer needs. Product teams required accuracy, context retention, logic, branching, and stable execution. CoPilot could not meet those needs with LLMs alone.

Conductor provides the missing structure.

- Domain-aware preprocessing

- Structured, explicit context retrieval

- Model and tool selection driven by rules

- Memory management that does not rely on inference

- Intermediate validation

- Workflow governance

- Safe and consistent execution

With Conductor in place, CoPilot became more than a language model interface. It became a dependable product system that can support strategy, backlog development, customer insight analysis, and outcome planning. It now behaves like a tool designed for product teams, not a model doing its best to act like one.
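Two items from that list, rule-driven selection and memory that does not rely on inference, are easy to illustrate. The sketch below is a hedged example only: the rule patterns, the target names (`calculator_tool`, `product_data_lookup`, `language_model`), and the `ContextStore` class are assumptions made for illustration and do not reflect Conductor's real rules or interfaces.

```python
import re
from typing import Optional

# Hypothetical routing rules, checked in order. The first match wins.
RULES = [
    (re.compile(r"\b(count|sum|average|total)\b"), "calculator_tool"),
    (re.compile(r"\b(roadmap|backlog|feedback)\b"), "product_data_lookup"),
]
DEFAULT_TARGET = "language_model"


def select_target(request: str) -> str:
    # Selection is decided by explicit rules, not by asking the model.
    text = request.lower()
    for pattern, target in RULES:
        if pattern.search(text):
            return target
    return DEFAULT_TARGET


class ContextStore:
    """Explicit memory: returns what was stored, or nothing at all."""

    def __init__(self) -> None:
        self._facts: dict[str, str] = {}

    def remember(self, key: str, value: str) -> None:
        self._facts[key] = value

    def recall(self, key: str) -> Optional[str]:
        # A missing key yields None, so nothing is guessed or filled in.
        return self._facts.get(key)
```

Because the rules are ordered, a request such as "What is the total feedback count?" goes to the calculator even though it also mentions feedback. Whether that ordering is right is a design decision for the domain, which is the kind of choice an orchestration layer makes explicit.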

Experience how ProdPad CoPilot applies this architecture to real product workflows.

Where I believe this architecture goes next

The most interesting part of this journey is how universal the pattern is. Conductor was built for product teams, but the architecture it uses can support any domain where reliability and structure matter.

Finance, compliance, engineering, customer operations, research, and strategy all depend on workflows that branch, escalate, loop, and require precision. The AI OS model handles these patterns cleanly.

Recent industry evidence underlines this shift. Reports from early agent deployments, such as Microsoft’s, showed high failure rates and unstable behaviors when models were left to govern themselves inside agent loops. Coverage from Futurism in 2024 highlighted exactly how these failures manifest at scale. DAIR.AI’s analysis of agent brittleness pointed to the same structural issues.

As more companies adopt AI into their processes, the need for this architecture will increase. There is little tolerance for drift or invention in operational environments. The systems that succeed will be the ones that treat the model as a component rather than the foundation.

ProdPad CoPilot is already benefiting from this shift, and the broader industry will too.

What I believe teams should take from this

If you are building or evaluating AI systems, the model itself is only one part of the equation. The orchestration layer around it determines whether it will behave consistently, whether it will scale, and whether it will integrate into real work.

This layer is becoming the real differentiator. It is where reliability, speed, and accuracy come from. It is also where innovation will happen over the next decade. Once the architecture is stable, entirely new categories of intelligent tools become possible.

Conductor is the first working expression of this belief inside ProdPad. It transformed what CoPilot can do, and it opened doors for work we had not been able to attempt before. I am convinced this approach will define the next generation of AI systems. It finally gives us a way to build AI that teams can trust.

Conductor raised the bar for CoPilot and improved ProdPad as a whole, bringing order to ideas, feedback, and strategy so teams can operate with clarity.